Why Your AI Demo Works Perfectly (And Your Production App Doesn't)

By Prateek Sharma

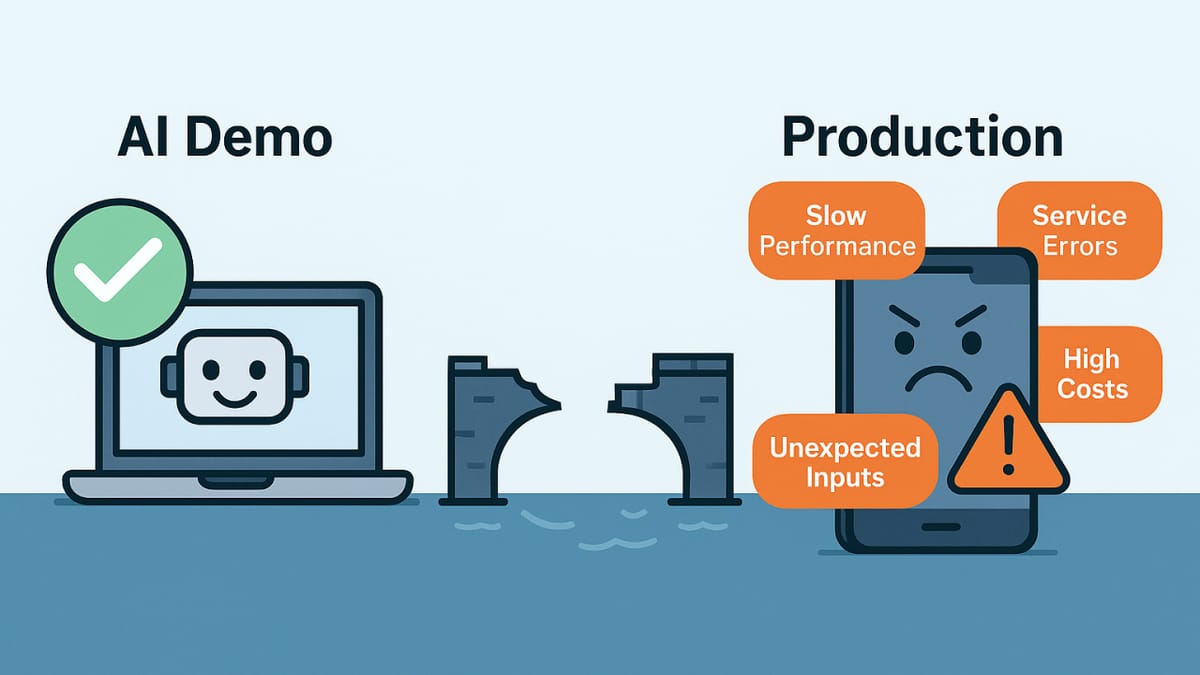

Let me tell you about a pattern I keep seeing. Someone shows me an AI demo—maybe it's an image recognition tool, or a clever chatbot, or something that analyzes drawings. It works beautifully. Clean interface, instant results, everyone's impressed. Then they try to actually launch it, and suddenly everything falls apart.

This happens way more often than people admit.

When Reality Hits

I've been building AI applications for a while now—everything from apps that analyze kids' drawings to tools that scrape social media data. And honestly? The demo is always the easy part.

Here's what actually happens in production: Your model, which ran smoothly on 50 test images, suddenly chokes when users upload 500 photos with unusual file formats. The API you're calling has rate limits you didn't account for. Response times that felt snappy in testing now feel glacially slow. And users? They do things you never imagined—upload sideways images, submit blank forms, or somehow manage to break your app in ways that shouldn't even be possible.
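To make the "unusual file formats" problem concrete, here is a minimal sketch of checking an upload before it ever reaches the model. Everything in it is an assumption for illustration: the 10 MB limit, the two accepted formats, and the names `validate_upload` and `detect_format` are hypothetical, not taken from any particular app.

```python
from typing import Optional

MAX_BYTES = 10 * 1024 * 1024  # assumed 10 MB limit; tune for your service

# Leading "magic" bytes for the formats this hypothetical app accepts.
SIGNATURES = {
    "png": b"\x89PNG\r\n\x1a\n",
    "jpeg": b"\xff\xd8\xff",
}


def detect_format(data: bytes) -> Optional[str]:
    """Return the detected image format, or None if it is not recognized."""
    for name, magic in SIGNATURES.items():
        if data.startswith(magic):
            return name
    return None


def validate_upload(data: bytes) -> str:
    """Raise ValueError for uploads the model should never see."""
    if not data:
        raise ValueError("empty upload")
    if len(data) > MAX_BYTES:
        raise ValueError("file too large")
    fmt = detect_format(data)
    if fmt is None:
        raise ValueError("unsupported or corrupt image format")
    return fmt
```

Checking the bytes rather than the file extension is a deliberate choice here: users rename files, and an extension tells you nothing about what is actually inside.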

It's not just me. Talk to any developer who's actually shipped AI features, and they'll tell you the same story.

The Stuff Nobody Talks About

You know what takes up most of the time? Not the AI model itself—it's everything else. Error handling for when the model fails (and it will fail). Figuring out how to keep your app responsive when AI processing takes 10 seconds instead of 2. Managing costs to avoid waking up to a $1,000 API bill. Dealing with threading issues in mobile apps. Ensuring that everything still functions properly when your AI service goes down at 3 AM.
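On the cost side, one simple guard is to refuse new AI calls once a spending cap is reached. The sketch below is only that, a sketch: `SpendGuard`, `BudgetExceeded`, and the idea of passing in an estimated cost per call are hypothetical names and assumptions, and a real version would also need to reset the counter each day and persist it somewhere.

```python
class BudgetExceeded(Exception):
    """Raised when a call would push spending past the configured cap."""


class SpendGuard:
    """Tracks estimated spend and blocks calls that would exceed a limit."""

    def __init__(self, limit_usd: float) -> None:
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def charge(self, estimated_cost_usd: float) -> None:
        """Record a call's estimated cost, or raise if it breaks the cap."""
        if self.spent_usd + estimated_cost_usd > self.limit_usd:
            raise BudgetExceeded(
                f"cap of ${self.limit_usd:.2f} would be exceeded"
            )
        self.spent_usd += estimated_cost_usd
```

You would call `guard.charge(cost)` right before each API request and treat `BudgetExceeded` like any other failure the app has to handle gracefully.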

I recently worked on a mobile app that needed image analysis. Sounds simple, right? The AI part was actually straightforward. But then came image upload optimization, background processing, state management, handling network failures, and making sure the app didn't freeze while waiting for results. The AI was maybe 20% of the work.
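The "don't freeze while waiting" part usually comes down to running the slow call asynchronously and capping how long you are willing to wait. Here is a rough Python sketch of that idea using `asyncio`. The `analyze_image` function is a stand-in that just sleeps, not a real model call, and on a mobile app you would use the platform's own concurrency tools instead.

```python
import asyncio


async def analyze_image(data: bytes, delay_s: float = 0.05) -> str:
    """Stand-in for a slow model call (hypothetical; it only sleeps)."""
    await asyncio.sleep(delay_s)
    return "ok"


async def analyze_with_timeout(data: bytes, timeout_s: float) -> str:
    """Wait for the analysis, but give up after timeout_s seconds."""
    try:
        return await asyncio.wait_for(analyze_image(data), timeout=timeout_s)
    except asyncio.TimeoutError:
        # The caller can show a retry option instead of a frozen screen.
        return "timed out"


if __name__ == "__main__":
    print(asyncio.run(analyze_with_timeout(b"fake image bytes", timeout_s=1.0)))
```

The useful property is that a slow analysis becomes a state the app can display ("still working", "timed out, try again") rather than something that blocks everything else.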

What We Actually Need

Here's the thing: everyone's focused on making AI models smarter. That's great, but model quality is no longer the bottleneck. What we actually need are better tools for the messy middle: the infrastructure that connects "cool demo" to "things people can actually use."

We need frameworks that handle API orchestration without making you write a thousand lines of boilerplate. Fallback strategies that just work. Performance optimizations that don't require a PhD to implement. Integration patterns that play nicely with React, Swift, Python, or whatever you're using.
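As one example of what a fallback strategy can look like, here is a small sketch: retry the primary call a few times with exponential backoff, then hand off to a fallback. The name `call_with_fallback` and its parameters are hypothetical, and the retry count and delays are assumptions you would tune for your own service.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_fallback(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try primary up to `attempts` times, then return fallback()."""
    for attempt in range(attempts):
        try:
            return primary()
        except Exception:
            # Back off 0.5s, 1s, 2s, ... between tries; skip the wait
            # after the final failed attempt.
            if attempt < attempts - 1:
                sleep(base_delay_s * 2 ** attempt)
    return fallback()
```

The fallback might be a cached result, a cheaper model, or just an honest "try again later" message. The `sleep` parameter is injectable so the waits can be skipped in tests. A production version would likely catch only specific, retryable errors rather than every `Exception`.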

The Real Challenge

Look, AI is amazing. But the demo-to-production gap is real, and it's holding back many potentially great products. The companies and developers who figure out how to bridge this gap smoothly—they're the ones who'll actually change how people work and build things.

Because at the end of the day, nobody cares how impressive your demo is. They care whether your app works when they need it to.

Originally published on Protovate.AI

Protovate builds practical AI-powered software for complex, real-world environments. Led by Brian Pollack and a global team with more than 30 years of experience, Protovate helps organizations innovate responsibly, improve efficiency, and turn emerging technology into solutions that deliver measurable impact.

Over the decades, the Protovate team has worked with organizations including NASA, Johnson & Johnson, Microsoft, Walmart, Covidien, Singtel, LG, Yahoo, and Lowe’s.
